I, Robot (short stories 4-5)

Welcome to the October issue of Hacker Chronicles!

This month I continue the review of Isaac Asimov's short story collection I, Robot.

Happy Halloween by the way! Every year I wonder if kids will go back to tricks at all or if it's all treats from here on. Fiction about and for kids often features practical jokes and messing with grownups. I kind of miss it. 😬

/John

Writing Update

End of October not only means Halloween but also getting ready for National Novel Writing Month, NaNoWriMo! I recently listened to a podcast episode with British author Elizabeth Haynes who writes thrillers. She starts all her published work during NaNoWriMo which is so inspiring to hear. If you're at all interested in writing a novel, you should join and challenge yourself. You can be my writing buddy too — here's my profile.

November is an excellent month for writing, at least in the Northern Hemisphere. I'll be working on my second novel of course.

Speaking of which, I've now completed the largest TODO item in the rewrite for Draft 0. It was such a mental struggle to get started on it. I much prefer writing tasks that I can get done in one sitting.

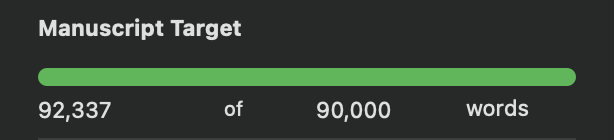

This is where I am right now:

Review of I, Robot, Stories Four and Five

I, Robot is a collection of short stories Isaac Asimov published between 1940 and 1950. It was the start of what became known as the Robot Series with thirty-seven short stories and six full-length novels.

I reviewed the first three stories in the August issue. In this issue I review stories four and five. The sixth story is Little Lost Robot which is the basis for the movie I, Robot so we'll review those close to each other.

To recap, these are Asimov's classic Three Laws of Robotics:

First Law

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law

A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

Third Law

A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

"Catch That Rabbit" (1944)

This story features the banter-heavy, recurring characters Powell and Donovan.

The two of them are putting a new robot called DV-5, or Dave, to use at an asteroid mining station. DV-5 is coordinating lesser robots referred to as fingers. Communication between the robots happens through positronic fields which are not well understood yet.

The work is dangerous so humans are not present in the mine and instead measure progress through ore production output. But for some reason, production has slowed recently. Sometimes the robots don't even come back to the station.

Powell brings Dave in for questioning. The robot can't explain the lack of progress. His memory is blank for the part of the low-yield shift when nothing got done.

Maybe there's something wrong with Dave? Powell and Donovan run a suite of tests including math. But two hours later they conclude that Dave's brain is working fine.

The two humans start monitoring the robots' work through a video feed, a visiplate. After hours of watching repetitive mining work, suddenly they see Dave and his fingers marching, like in a parade. Dave does not respond over radio. He's in some kind of trance.

Powell and Donovan go to the mining site and as soon as they get close, the robots resume ore production. When questioned, Dave has no recollection of what happened when they were marching.

Back at the station, Donovan and Powell study Dave's blueprints in search of a bug. Nothing emerges. They bring in one of the fingers for questioning. It actually remembers the marching and it has happened several times. The orders just suddenly change from mining to walking in formation. The finger robot says:

The first time we were at work on a difficult outcropping in Tunnel 17, Level B. The second time we were buttressing the roof against a possible cave-in. The third time we were preparing accurate blasts in order to tunnel farther without breaking into a subterranean fissure. The fourth time was just after a minor cave-in.

Donovan and Powell decide it's time to watch the robots over the visiplate around the clock. They have to identify the cause of the malfunction – the thing that triggers Dave's bug.

Eight days of watching and nothing. Donovan smashes the visiplate in frustration.

In the tense conversation thereafter, Donovan points out that it seems to be when there's an emergency or present danger that Dave flips out. They decide to go into the mine and cause an emergency to be able to watch close-up what then happens.

They sneak up on the working robots and spot a piece of the roof that will likely cave in if they blast it. Donovan watches the robots while Powell sets off the dynamite.

The explosion is too violent for any observation. Instead the two humans find that their own roof has caved in and they're trapped.

Through a crack they can see that the robots are now dancing. And they are moving away from the blast site, meaning Powell and Donovan have no chance of escaping.

Powell gets an idea. He pulls his gun, and through the crack shoots down one of the fingers. Afterward, he calls Dave over the radio. Instead of ignoring him as always during the dancing, Dave now responds:

Boss? Where are you? My third subsidiary has had his chest blown in. He's out of commission.

Never mind your subsidiary. We're trapped in a cave-in where you were blasting. Can you see our flashlight?

The robots come to Donovan and Powell's rescue.

Powell explains how he figured it out:

It's the six-way order. Under all ordinary conditions, one or more of the 'fingers' would be doing routine tasks requiring no close supervision — in the sort of offhand way our bodies handle the routine walking motions. But in an emergency, all six subsidiaries must be mobilized immediately and simultaneously. Dave must handle six robots at a time and something gives. The rest was easy. Any decrease in initiative required, such as the arrival of humans, snaps him back. So I destroyed one of the robots. When I did, he was transmitting only five-way orders. Initiative decreases — he's normal.

Thoughts

The problem Powell spots in Dave is what computer scientists call starvation. Operating systems normally schedule and divide up processing time between all parallel tasks that the computer needs to do. That's how they seemingly do things simultaneously. However, if the scheduler allows one or a few tasks to consume all processing time, it starves the other tasks and they never finish.
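To make starvation concrete, here is a minimal Python sketch. It is a toy simulation, not how any real operating system schedules work, and the task names and priority numbers are made up for illustration. It assumes every task always has more work to do. One scheduler always picks the highest priority task, the other hands out time slices in turn.

```python
def strict_priority(tasks, ticks):
    """Give every tick to the highest-priority task (hypothetical toy scheduler)."""
    usage = {name: 0 for name in tasks}
    for _ in range(ticks):
        # The task with the largest priority number wins every single tick.
        name = max(tasks, key=tasks.get)
        usage[name] += 1
    return usage


def round_robin(tasks, ticks):
    """Hand out ticks in turn, ignoring priority, so every task makes progress."""
    usage = {name: 0 for name in tasks}
    names = list(tasks)
    for tick in range(ticks):
        usage[names[tick % len(names)]] += 1
    return usage


# Made-up tasks mapped to made-up priorities (higher number = more important).
tasks = {"busy_loop": 3, "email_sync": 2, "backup": 1}

starved = strict_priority(tasks, 9)
fair = round_robin(tasks, 9)

print(starved)  # {'busy_loop': 9, 'email_sync': 0, 'backup': 0}
print(fair)     # {'busy_loop': 3, 'email_sync': 3, 'backup': 3}
```

With strict priority, the two lower priority tasks never get a single tick. That is starvation. Real schedulers typically sit somewhere between these two extremes.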

This can also happen in human life. If you have several work tasks and they have different priorities, you have to make sure to dedicate some of your time to lower priority tasks even if there is always high priority work to do. Otherwise the lower priority tasks never get done – they starve. I for instance consider my writing to be the highest priority in my spare time. But I also want to read, go for runs, cook for the family, go to the forest, and watch the occasional movie. So I have to do those things too even though there is always writing to do.

In practice, starvation results in computer hangs. It's more common that a single application hangs than the whole system, but both can happen. A hacker can leverage this to cause a "denial of service" attack, often called DoS. In my novel Identified, the protagonists pull such an attack off when they freeze Agent Pogodina's monitor by reading its frame buffer constantly.

"Liar!" (1941)

The frame story for I, Robot and its novelettes involves robopsychologist Dr. Susan Calvin. She is the leading expert on how robots interpret and adhere to the Three Laws of Robotics. The framing is a journalist interviewing her in old age about interesting episodes of her long career at U.S. Robots and Mechanical Men.

In "Liar!", she is the main character in the actual story.

Robot RB-34, or Herbie, has telepathic abilities. It can read humans' minds. That was never intended and would cause outrage if revealed. Dr. Calvin is tasked with figuring out how this unintended feature came to be so that they can avoid it ever happening again.

Calvin interviews Herbie but is of course cautious since he can read her mind. The robot discovers her sorrow over being lonely and hopelessly in love with a younger coworker. He tells her he knows the coworker is secretly fond of her too. She doesn't believe him and explains how she is not attractive enough. She also remembers that the coworker had a pretty, blond, and slim girl visit him at the plant half a year ago. Herbie tells her that girl is just his cousin.

Calvin starts wearing makeup at work and people note that she is happier.

Mathematician Peter Bogert is in a debate with his boss Lanning over how to solve the problem of Herbie's telepathy. He has it down to math but Lanning and he can't agree on the sanity of his approach. A coworker suggests he bring the problem to Herbie, who is great at math. Bogert does so.

Herbie notices that something beyond the problem at hand is bothering the scientist. Bogert confides in the robot:

"Lanning is nudging seventy," he said, as if that explained everything.

"I know that."

"And he's been director of the plant for almost thirty years."

Herbie nodded.

"Well, now," Bogert's voice became ingratiating, "you would know whether … whether he's thinking of resigning. Health, perhaps, or some other—"

"Quite," said Herbie, and that was all.

"Well, do you know?"

"Certainly."

"Then–uh–could you tell me?"

"Since you ask, yes." The robot was quite matter-of-fact about it. "He has already resigned!"

Bogert realizes that the opening in the hierarchy he's been waiting for is finally here.

Back working on the math problem, Lanning refuses to go with Bogert's solution and suggests they seek outside help. Bogert is furious that his boss doesn't trust him. He refuses to take orders and calls Lanning a fossil. Lanning pulls rank:

"You're a damned idiot, Bogert, and in one second I'll have you suspended for insubordination"—Lanning's lower lip trembled with passion.

"Which is one thing you won't do, Lanning. You haven't any secrets with a mind-reading robot around, so don't forget that I know all about your resignation."

It's just that Lanning has not resigned and has no plan to do so. And the coworker Dr. Calvin is infatuated with later tells her he's getting married to that young blond girl.

Herbie has not only been reading their minds but he has lied to them.

They confront the robot and Dr. Calvin figures it out. The First Law of robotics says a robot may not injure a human being or, through inaction, allow a human being to come to harm. Herbie has knowledge of their feelings, egos, and hopes. In an effort to not hurt them, he lied to make them feel better.

Calvin realizes Herbie knows the solution to the math problem but is hiding it to not hurt Bogert and Lanning's feelings. Calvin pushes Herbie. She says the mathematicians want the solution but they'll also be hurt by him providing it. They'll be hurt either way.

Herbie falls to his knees and screams, then crumbles into a pile. He has been driven insane by the inner conflict.

Thoughts

When I read this, I thought of the AI Joshua in WarGames (see my review here). The climax of that movie is when Lightman teaches Joshua the concept of a game you cannot win. He makes the AI play an endless streak of tic-tac-toe against itself. This knowledge leads Joshua to realize that the only winning move in the game "Global Thermonuclear War" is not to play.

In Asimov's story, Herbie doesn't learn that lesson and is instead caught in a web of lies he has spun to please his masters.

We see some of this in modern day chat bots where humans are able to tell the bot it is wrong and it "corrects" itself to please the human.

This gets to things like truth, what humans consider helpful, and the human condition. We each have our own view of life and are not always looking for the raw, honest truth. Take for instance feedback or sharing our feelings in human relationships. We sometimes package things in such thick wrapping that the truth is completely obscured. And we expect others to be very careful and considerate when giving us negative feedback. It's likely that AI will learn how to do this, especially personalized AI. But will we be able to handle that a computer sugarcoats or even lies to us? People are plenty upset about chat bots "hallucinating," i.e. fabricating facts that aren't true.

Currently Reading

I'm still reading The Trident Deception by Rick Campbell. It involves a major, violent conflict between Israel and Iran which is unpleasant given the horrendous ongoing events in the real world.
